In this blog post, you will find a step-by-step guide on how to generate and use the deployment settings file (DSF) in your Azure DevOps release pipeline when deploying Power Platform solutions to downstream environments. The ultimate goal is a healthy, well-optimized ALM process for your Power Platform and Dynamics 365 CRM solutions. If you have not read part 1 and part 2 yet, I recommend doing so before proceeding.

This guide focuses solely on how to manually generate the DSF and use it in your release pipelines to achieve a fully automated CI/CD scenario. It does not cover setting up the pipelines themselves. Nor does it cover how to generate the DSF and set its values automatically; I am quite sure that is possible, but I have not tried it yet. I hope to find time soon to look into it and write a blog post about how to do it.

Step 1: Ensure your connections are active/connected in the target environments, otherwise create them

First, you need to ensure that the connections used in your solution exist in the target environments you want to deploy to. For example, if your solution includes cloud flows that use the Outlook connector, you must make sure an Outlook connection is set up in both the UAT and PROD environments, if those are your deployment targets. To check whether a connection exists in a given environment, open the environment at make.powerapps.com and select "Connections" in the left navigation menu. If the connection is already there, you can use it (make sure its Status shows 'Connected'); otherwise, you will need to update it or create a new one.

Note: It is strongly recommended that you create the connections with a service account so that they are not tied to your personal account. It is also very important to share the connections with the Application User (service principal/client secret) if you use it in your release pipeline to authenticate with your target Power Platform environment.

Step 2: Export your solution and generate your deployment settings file using Power Platform CLI

After confirming that your connections exist and are connected in the target environments, you can export your solution from the DEV environment and generate the DSF using the Power Platform CLI.

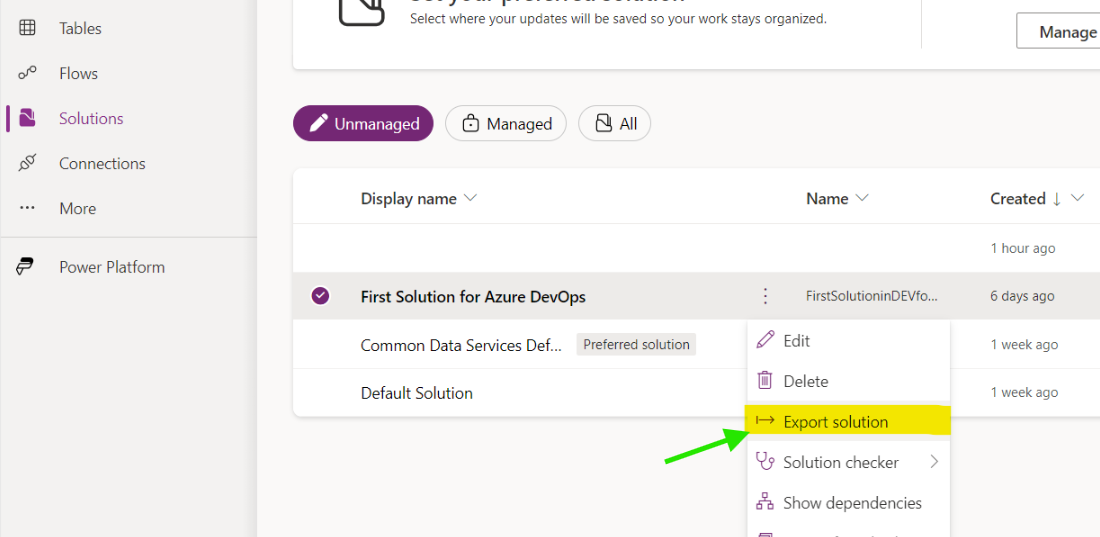

The first step is to access your solutions in the DEV environment. Next, click on the three dots next to the solution you wish to deploy and then click on 'Export solution' as shown below.

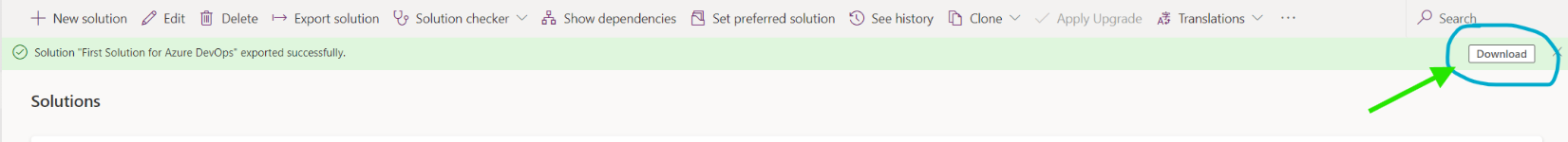

Click 'Next', select 'Unmanaged', and then click 'Export'. Once your solution has finished exporting, you will have the option to download it as shown below. This downloads your solution as a .zip file.

Now it is time to generate the deployment settings file. In this guide, I will use Visual Studio Code (VS Code) together with the Microsoft Power Platform CLI. If you have not installed the Power Platform CLI yet, Microsoft has a guide on how to install it for use with VS Code: https://learn.microsoft.com/en-us/power-platform/developer/howto/install-vs-code-extension.

You can also check whether Power Platform CLI is installed by typing the following command in the VS Code terminal:

```powershell
Get-Command pac | Format-List
```

If it is installed, the output should look similar to this:

```
Name            : pac.exe
CommandType     : Application
Definition      : C:\Users\you\.dotnet\tools\pac.exe
Extension       : .exe
Path            : C:\Users\you\.dotnet\tools\pac.exe
FileVersionInfo : File:             C:\Users\you\.dotnet\tools\pac.exe
                  InternalName:     pac.dll
                  OriginalFilename: pac.dll
                  FileVersion:      1.29.11
                  FileDescription:  Microsoft Power Platform CLI
                  Product:          Microsoft Power Platform©
                  ProductVersion:   1.29.11+g9e2b163
                  Debug:            False
                  Patched:          False
                  PreRelease:       False
                  PrivateBuild:     False
                  SpecialBuild:     False
                  Language:         Language Neutral
```

If it is not installed, you will get an error like this:

```
Get-Command: The term 'pac' is not recognized as a name of a cmdlet, function, script file, or executable program.
Check the spelling of the name, or if a path was included, verify that the path is correct and try again.
```

Once the Power Platform CLI is installed, you can type `pac` to get a list of the commands and operations it supports, just to give you a feel for what is possible 😄
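If you want the same check outside PowerShell (for example, in a cross-platform build script), a minimal Python sketch can do the lookup with `shutil.which`. `find_pac` is a hypothetical helper name; the sketch only confirms that an executable called `pac` is on the PATH, not which version it is.

```python
import shutil
from typing import Optional


def find_pac() -> Optional[str]:
    """Return the full path of the Power Platform CLI executable, or None if absent.

    shutil.which searches PATH the way a shell does; on Windows it also
    honours PATHEXT, so "pac" matches "pac.exe".
    """
    return shutil.which("pac")


if __name__ == "__main__":
    location = find_pac()
    if location is None:
        print("pac was not found on PATH; install the Power Platform CLI first.")
    else:
        print(f"pac found at {location}")
```

If pac was installed as a .NET tool, its folder (for example `C:\Users\you\.dotnet\tools`) must be on the PATH for this lookup to find it.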

In this case, we will use the `pac solution create-settings` command. It takes the path to your exported solution zip (`--solution-zip`) and the name of the DSF to generate (`--settings-file`), which is a JSON file. The command looks like this:

```powershell
pac solution create-settings --solution-zip "C:\Users\dk2380\Downloads\FirstSolutioninDEVforAzureDevOps_1_0_0_1.zip" --settings-file DeploymentSettingsFile_Template_FirstSolutioninDEV.json
```

The command extracts the connection references and/or environment variables from your solution and writes them to the DSF in JSON format, as shown below:

```json
{
  "EnvironmentVariables": [
    {
      "SchemaName": "sy_APIKEY",
      "Value": ""
    }
  ],
  "ConnectionReferences": [
    {
      "LogicalName": "sy_sharedcommondataserviceforapps_c4866",
      "ConnectionId": "",
      "ConnectorId": "/providers/Microsoft.PowerApps/apis/shared_commondataserviceforapps"
    },
    {
      "LogicalName": "sy_sharedoffice365_7c062",
      "ConnectionId": "",
      "ConnectorId": "/providers/Microsoft.PowerApps/apis/shared_office365"
    },
    {
      "LogicalName": "sy_sharedoutlook_318dd",
      "ConnectionId": "",
      "ConnectorId": "/providers/Microsoft.PowerApps/apis/shared_outlook"
    }
  ]
}
```

As you can see, this example contains a single environment variable and three connection references. The "Value" field of the environment variable is empty, and so is the "ConnectionId" field of each connection reference; these are the fields you need to fill in. The "ConnectionId" field makes each connection reference in a given environment point to the correct connection in that same environment. Because connection IDs differ between environments, you need a separate DSF for each target environment: two DSFs in total if you deploy to both UAT and PROD. The DSF generated by the CLI serves as the template for both.
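Since every empty "Value" or "ConnectionId" has to be filled in before the file is usable, a small check can list what is still missing. This is a minimal Python sketch, not part of the Power Platform tooling; `load_settings` and `find_unfilled_entries` are hypothetical helper names, and the sketch treats any empty string as "not filled in yet".

```python
import json
from typing import List


def load_settings(path: str) -> dict:
    """Read a deployment settings file (DSF) from disk."""
    # utf-8-sig also accepts files saved with a byte-order mark by some Windows editors
    with open(path, encoding="utf-8-sig") as f:
        return json.load(f)


def find_unfilled_entries(settings: dict) -> List[str]:
    """List every environment variable or connection reference whose value is still empty."""
    missing = []
    for var in settings.get("EnvironmentVariables", []):
        if not var.get("Value"):
            missing.append(f"Environment variable {var['SchemaName']}: Value is empty")
    for ref in settings.get("ConnectionReferences", []):
        if not ref.get("ConnectionId"):
            missing.append(f"Connection reference {ref['LogicalName']}: ConnectionId is empty")
    return missing
```

Run against the freshly generated template above, it reports four entries (one environment variable and three connection references); on a completed UAT or PROD file it should return an empty list.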

Note: If you later add environment variables and/or connection references to your solution, it is important to regenerate the template and update your UAT and PROD files so they include the new entries.
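Re-entering every value by hand after regenerating is error-prone. A minimal Python sketch of one way to carry the values from an existing UAT or PROD file into a regenerated template is shown here, assuming entries can be matched on `SchemaName` and `LogicalName`. The helper names are hypothetical, and the pac call uses only the two flags already shown in this post.

```python
import copy
import subprocess
from typing import List


def create_settings_command(solution_zip: str, settings_file: str) -> List[str]:
    """Build the same `pac solution create-settings` call used earlier in this post."""
    return [
        "pac", "solution", "create-settings",
        "--solution-zip", solution_zip,
        "--settings-file", settings_file,
    ]


def regenerate_template(solution_zip: str, settings_file: str) -> None:
    """Run pac to regenerate the DSF template; raises CalledProcessError on failure."""
    subprocess.run(create_settings_command(solution_zip, settings_file), check=True)


def merge_values(template: dict, existing: dict) -> dict:
    """Copy filled-in values from an existing environment DSF into a regenerated template.

    New entries keep their empty values so they stand out; entries that no
    longer appear in the template are dropped. The template is not modified.
    """
    old_values = {v["SchemaName"]: v.get("Value", "")
                  for v in existing.get("EnvironmentVariables", [])}
    old_conns = {c["LogicalName"]: c.get("ConnectionId", "")
                 for c in existing.get("ConnectionReferences", [])}
    merged = copy.deepcopy(template)
    for var in merged.get("EnvironmentVariables", []):
        var["Value"] = old_values.get(var["SchemaName"], var.get("Value", ""))
    for ref in merged.get("ConnectionReferences", []):
        ref["ConnectionId"] = old_conns.get(ref["LogicalName"], ref.get("ConnectionId", ""))
    return merged
```

For example, you could regenerate into a new file such as `DeploymentSettingsFile_Template_v2.json` (a placeholder name), load it together with your current UAT file, pass both to `merge_values`, and then fill in whatever entries are still empty before saving the result.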

Step 3: Fill out the values in the deployment settings file

Now that you have your DSF template with empty values, the next step is to populate them for both the UAT and PROD environments. For environment variables of the text data type, you can add the hardcoded values directly. For connection references, you will need the IDs of the connections in each target environment. In the DSF example above, we have three connection references: Dataverse (CDS), Office 365, and Outlook.

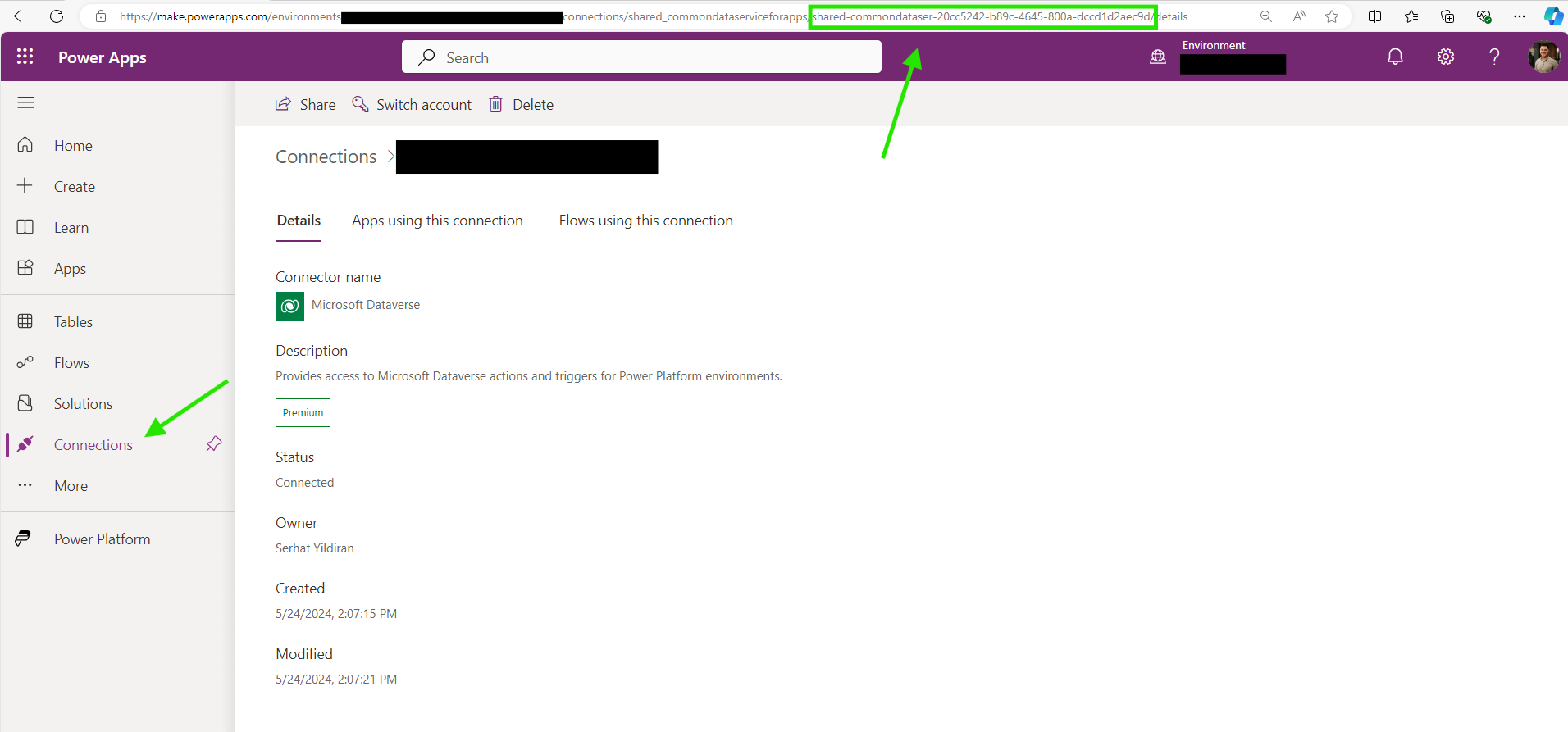

- To find the IDs, access the first downstream environment, such as UAT, by visiting make.powerapps.com and opening the "Connections" section in the left navigation menu.

- Select the connection you need the ID for (make sure it shows 'Connected'), and you will find the ID in the URL. Copy this ID and paste it into the ConnectionId field of the corresponding connection reference.

- Repeat this process for the remaining connection references in UAT, then do the same for PROD.

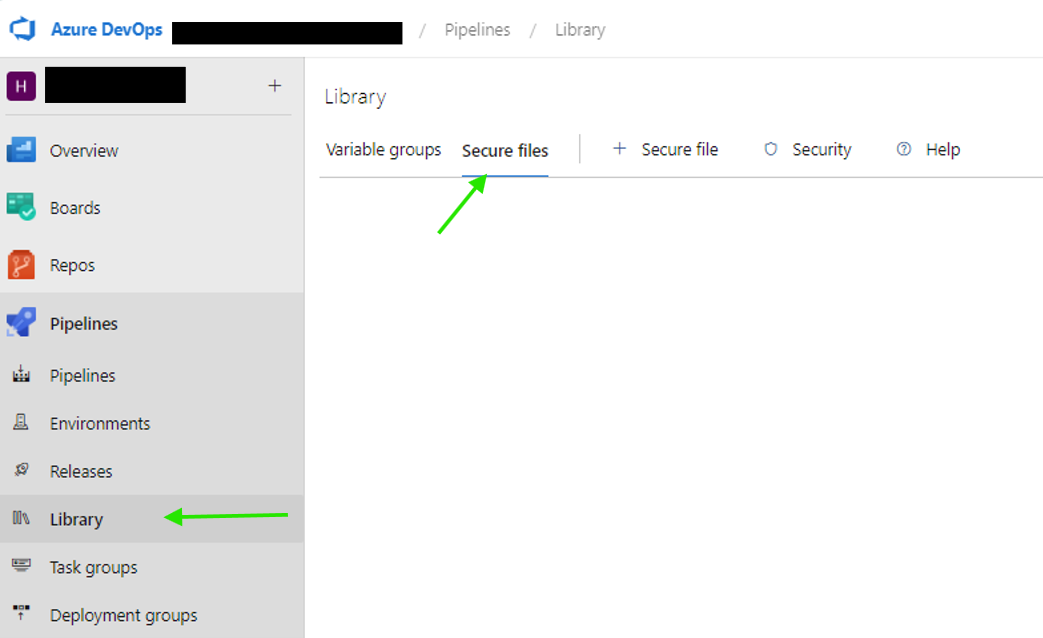
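If you prefer not to edit the JSON by hand, the steps above can be sketched in Python: extract the ID from each copied connection URL and write a filled-in copy of the template for one environment. This sketch assumes the connection URL path has the shape `/environments/<environment-id>/connections/<api-name>/<connection-id>/...`; check it against the URL you actually see, and treat all helper names as hypothetical.

```python
import copy
import json
from urllib.parse import urlparse


def connection_id_from_url(url: str) -> str:
    """Extract the connection ID from a connection URL copied from make.powerapps.com.

    ASSUMPTION: the path looks like
    /environments/<environment-id>/connections/<api-name>/<connection-id>/...
    """
    parts = [p for p in urlparse(url).path.split("/") if p]
    if "connections" not in parts:
        raise ValueError(f"No '/connections/' segment in URL: {url}")
    i = parts.index("connections")
    if len(parts) < i + 3:
        raise ValueError(f"No connection ID after the connector name in URL: {url}")
    return parts[i + 2]


def fill_settings(template: dict, values: dict, connection_urls: dict) -> dict:
    """Return a copy of the DSF template filled in for one target environment.

    values:          SchemaName  -> environment variable value
    connection_urls: LogicalName -> connection URL copied from the browser
    Entries without a mapping keep whatever the template already contains.
    """
    filled = copy.deepcopy(template)
    for var in filled.get("EnvironmentVariables", []):
        if var["SchemaName"] in values:
            var["Value"] = values[var["SchemaName"]]
    for ref in filled.get("ConnectionReferences", []):
        if ref["LogicalName"] in connection_urls:
            ref["ConnectionId"] = connection_id_from_url(connection_urls[ref["LogicalName"]])
    return filled


def write_settings(path: str, settings: dict) -> None:
    """Write the filled-in DSF as indented JSON, ready to upload as a secure file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(settings, f, indent=2)
```

You would call `fill_settings` once with your UAT values and URLs and once with your PROD ones, writing each result to its own file, which gives you the two DSFs uploaded in the next step.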

Step 4: Upload your deployment settings files for UAT and PROD to the pipeline Library as secure files in Azure DevOps

After preparing your DSF files for UAT and PROD, the next step is to add them as secure files in Azure DevOps, so they can be used in your release pipelines during deployment.

- Go to your project in Azure DevOps, navigate to the Library under the Pipelines section, and click on the Secure Files tab.

- Click "+ Secure File" and upload your newly created DSF files.

- After uploading the secure files (DSFs), check whether your pipelines need permission to use them. If you are using the classic pipeline editor, as I am in this example, you do not need to grant access manually. If you build your pipelines using YAML, grant access as follows:

  - Click on your secure file.

  - Select "Pipeline Permissions."

  - Click the three dots and choose "Open Access" (this lets any pipeline in the project use the file).

  - Repeat this process for your other DSF file.

Step 5: Use the deployment settings files in your release pipelines

Now that you have added your DSFs to the Library in Azure DevOps, you can use them in your release pipelines.

- Go to your release pipeline and edit it.

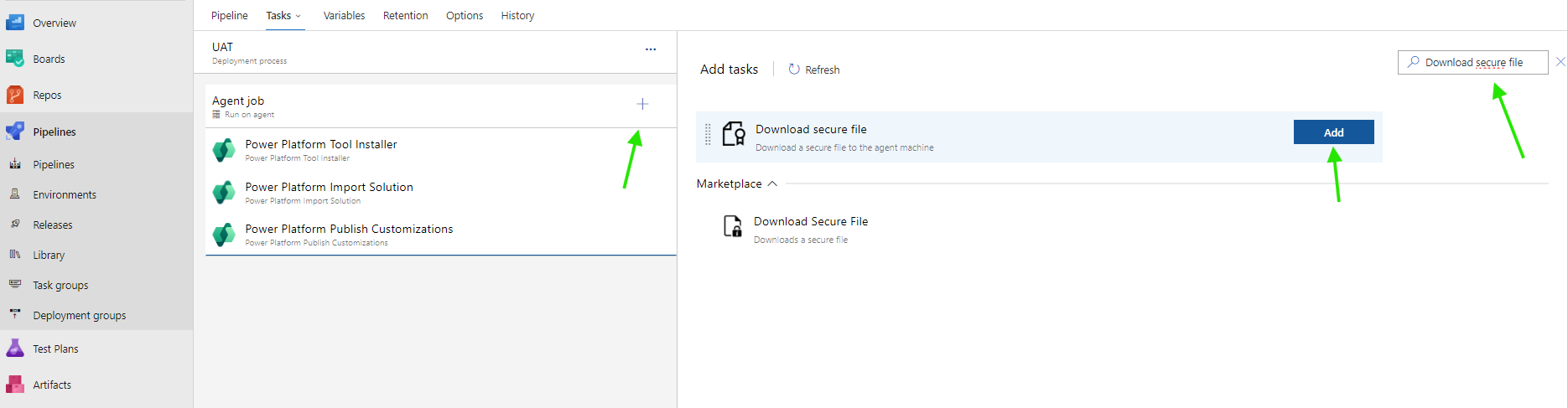

- Add a new task to your stage and search for "Download secure file". Add this task to your stage.

- Place this task before the "Power Platform Import Solution" task.

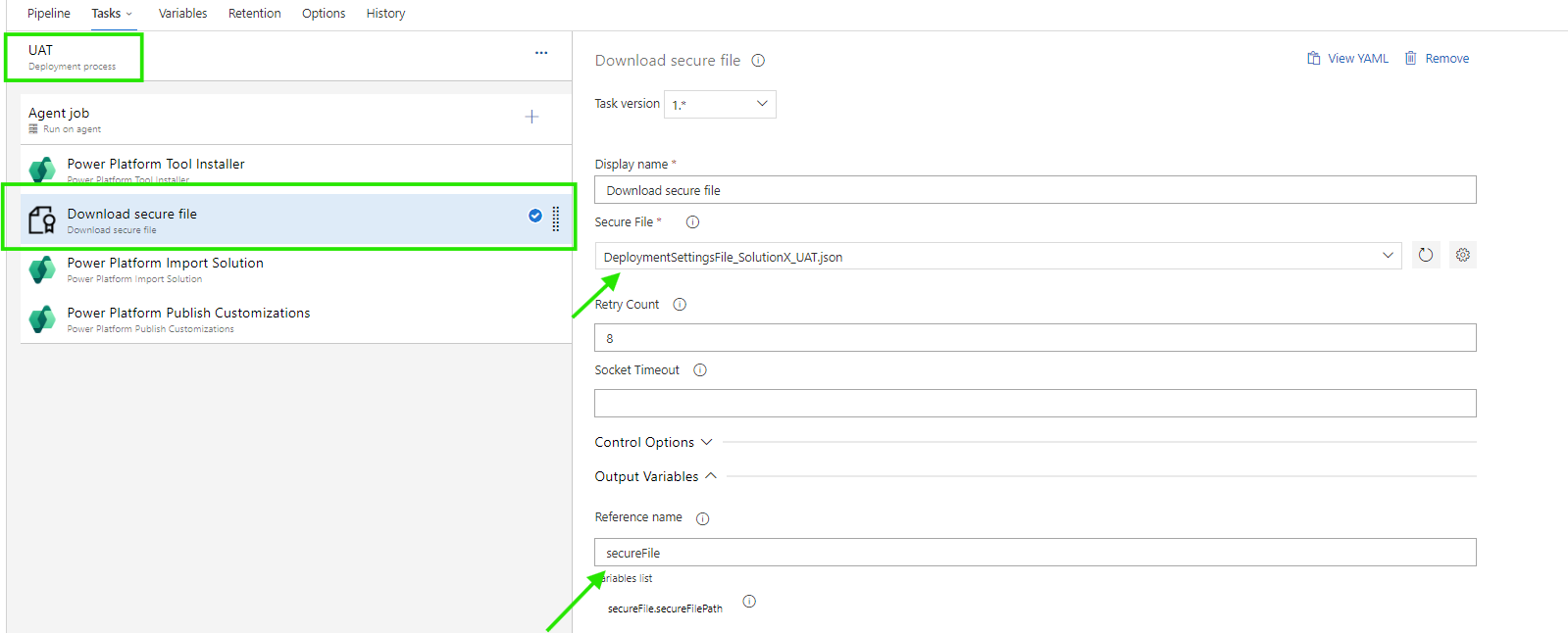

- Select the secure file, in this case, the UAT file, since we are working in the UAT stage. Under Output Variables, enter "secureFile" in the reference name field. This will allow us to reference the secure file in the import solution task.

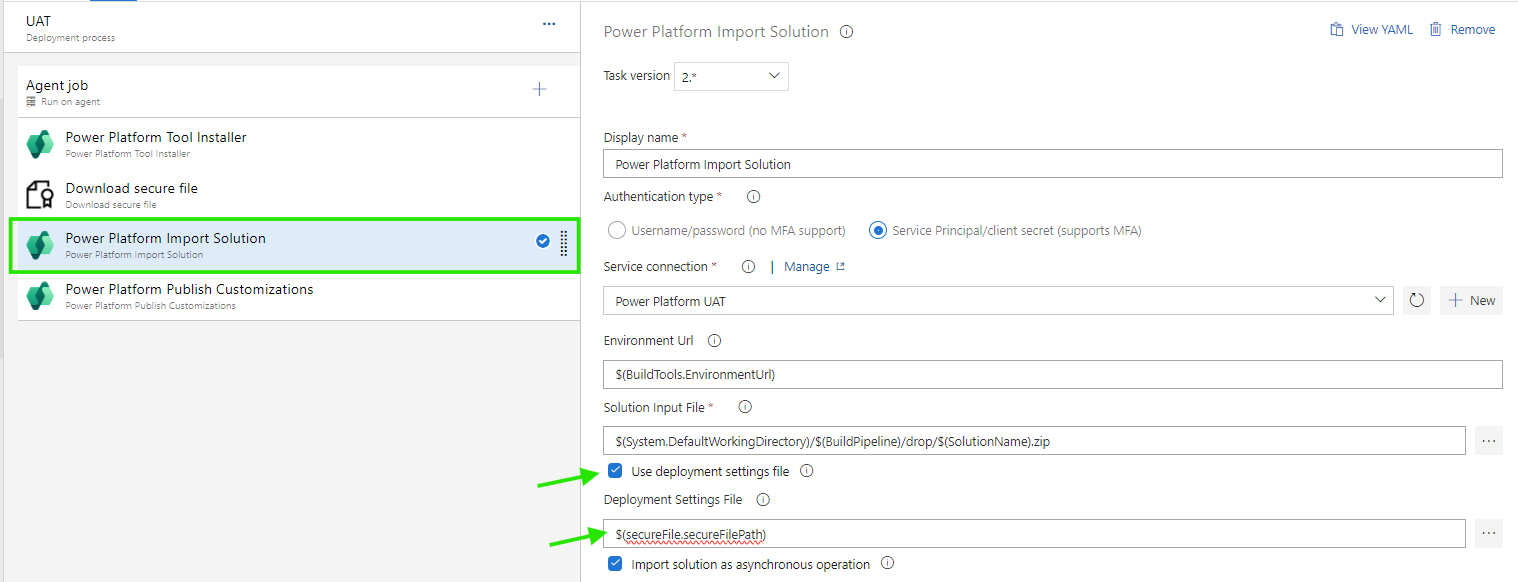

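- In the "Power Platform Import Solution" task, enable the "Use deployment settings file" option. Then enter `$(secureFile.secureFilePath)` as the deployment settings file path; this output variable points to where the "Download secure file" task saved the file.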

- Repeat this process for the other stages in your release pipeline, and you are all set! 🚀

Conclusion

Thank you for reading this series on using the deployment settings file in Azure DevOps when deploying Power Platform solutions.

This is my first series on a specific topic, and I have to admit, it took quite a bit of time to write and piece together. I have a lot of respect for those in the community who consistently contribute great content. It is not easy, and it definitely takes time.

I hope this series has given you a solid understanding of how to use the deployment settings file in Azure DevOps. While it is not overly complex, it plays a crucial role in establishing a healthy and optimized ALM process for our Power Platform, Dataverse, and Dynamics 365 CRM solutions. Healthy ALM is what we all aim for, or at least should aim for!

Feel free to leave a comment below or reach out to me on LinkedIn if you have any questions. See you again soon! 😄
